AI Product Lifecycle


Understanding the AI Product Lifecycle

The AI product lifecycle is a structured framework that guides teams from the initial concept of an AI solution to its deployment and ongoing improvement. This lifecycle ensures that AI products are not only technically sound but also aligned with user needs and business goals. Below, we delve into the stages of this lifecycle, narrating the journey of transforming a problem definition into a market-ready AI product.

Stage 1: Problem Definition

Every successful AI product begins with a clear understanding of the problem it aims to solve. This stage involves identifying specific pain points that users experience and defining the scope of the solution. For instance, imagine a mid-sized e-commerce company struggling to manage a growing volume of customer inquiries. Their customer support team is overwhelmed, leading to long response times and dissatisfied customers.

Key Actions:

- Conduct surveys and interviews with customers to gather insights.

- Analyze customer service data to identify recurring issues.

Stage 2: Ideation

Once the problem is clearly defined, the next step is ideation. This involves brainstorming potential AI-driven solutions that can address the identified pain points. In our example, the e-commerce company might consider developing an AI chatbot that can handle common inquiries, provide product recommendations, and assist with order tracking.

Key Actions:

- Organize brainstorming sessions with cross-functional teams.

- Evaluate the feasibility and impact of various ideas.

Stage 3: Data Collection

With a potential solution in mind, the team must now gather relevant data to inform the development of the AI model. This data can include historical chat logs, customer queries, product information, and other interaction data. The quality and quantity of data collected will significantly impact the performance of the AI model.

Key Actions:

- Identify and source existing datasets.

- Ensure data privacy and compliance with regulations.

Stage 4: Model Development

Next, the team transitions to model development. This stage involves creating a prototype of the AI product and training the model using the collected data. For the e-commerce chatbot, developers will utilize natural language processing (NLP) techniques to train the chatbot on understanding customer queries and generating appropriate responses.

Key Actions:

- Develop a minimum viable product (MVP) that demonstrates core functionalities.

- Train and test the model iteratively to improve accuracy.

Stage 5: Testing

Before launching the AI product, rigorous testing is essential to ensure its reliability and effectiveness. In this stage, the e-commerce chatbot undergoes various forms of testing, including unit testing, user acceptance testing, and performance testing. This allows the team to identify and address any issues that may arise during interactions with real users.

Key Actions:

- Conduct beta testing with a select group of customers.

- Gather feedback and refine the product based on test results.

Stage 6: Deployment

Once testing is complete and the chatbot performs well, it’s time for deployment. The team launches the AI chatbot on the company’s website and mobile app, integrating it seamlessly with existing customer service channels. This stage requires careful planning to ensure that the infrastructure can handle user traffic, especially during peak shopping seasons.

Key Actions:

- Monitor the deployment for any technical issues.

- Ensure that customer service representatives are trained to work alongside the chatbot.

Stage 7: Monitoring & Feedback

Post-deployment, continuous monitoring is vital to ensure the AI product is functioning as intended. The team tracks key performance metrics, such as response accuracy and user satisfaction. Regularly soliciting feedback from customers allows for real-time adjustments and improvements.

Key Actions:

- Implement analytics tools to monitor interactions and gather data.

- Set up channels for customer feedback to inform future iterations.

Stage 8: Iteration

The AI product lifecycle is not linear; it involves an iterative process. Based on monitoring and feedback, the team continuously refines the chatbot. This may include retraining the model with new data, expanding its capabilities, or enhancing its understanding of customer intent.

Key Actions:

- Conduct regular updates and improvements based on data insights.

- Foster a culture of continuous learning and adaptation within the team.

Case Study - Waste Monetization System

The Waste Monetization System is an innovative edge-enabled AI solution designed to enhance waste management in a manufacturing plant producing materials such as wooden stands, plastic spools, carton boxes, copper stands, steel trolleys, and plastic bags.

Business Objective

The primary objective of the Waste Monetization System is to enhance the efficiency and accuracy of scrap material identification and logging within the production facility. By automating the process, the system aims to:

- Reduce Manual Labor: Minimize the reliance on manual entry for scrap identification and logging, freeing up personnel for more value-added tasks.

- Increase Accuracy: Achieve human-level accuracy in scrap identification to reduce errors and ensure accurate tracking of materials.

- Optimize Waste Management: Improve the overall waste monetization process, enabling the plant to maximize revenue from scrap materials through efficient sorting and processing.

Success Criteria

To measure the success of the Waste Monetization System, the following criteria will be established:

- Identification Accuracy: Achieve at least 95% accuracy in scrap material recognition by the AI system.

- Processing Speed: Reduce the average time taken to log scrap entries from the current manual process by at least 50%.

- Operational Uptime: Ensure the system operates 24/7 with minimal downtime for maintenance or troubleshooting.

- User Satisfaction: Obtain a user satisfaction score of 85% or higher from dispatch personnel interacting with the system.

Let's now discuss each stage.

Stage 1: Problem Definition (Challenges to be Solved)

For the Waste Monetization System to function effectively, several AI challenges must be addressed, particularly considering that the system operates continuously (24/7) in a dynamic environment:

1. Variable Lighting Conditions:

- Day/Night Variability: The system must operate effectively in both bright daylight and dimly lit conditions, impacting visibility and image quality.

- Shadows and Reflections: Fluctuations in lighting can create shadows or reflections that hinder the camera's ability to accurately identify scrap materials.

2. View and Placement Variability:

- Orientation Issues: Scrap materials can be placed at various angles, making it difficult for the camera to correctly identify them.

- Positioning on the Weighing Platform: The position where the scrap is placed may obstruct the camera’s field of view, leading to potential misidentification.

3. Obstructions in Field of View:

- Interference from Personnel: Workers may inadvertently block the camera's view while performing their duties, affecting the system's ability to capture accurate data.

- Physical Objects: Other equipment or materials near the weighing platform can obstruct the camera, leading to incomplete data logging.

4. Energy Optimization:

- Continuous Operation: Maintaining constant camera operation can lead to excessive energy consumption, especially in a 24/7 operational environment.

- Activation Triggers: The challenge lies in determining when the camera should be activated to balance energy efficiency with the need for real-time monitoring.

5. System Integration:

- Compatibility with Existing Infrastructure: Ensuring that the new AI device can seamlessly integrate with the current PLC and weighing machine without disrupting ongoing operations.

- Data Flow and Communication: Establishing reliable data exchange protocols between the AI system and existing software solutions for effective real-time monitoring and logging.

6. Data Diversity and Quality:

- Variety of Scrap Types: The AI system must be trained to recognize a wide range of scrap materials (wood, plastic, metal, etc.), which may require diverse datasets.

- Quality of Data: Variability in data quality (e.g., unclear images, inconsistent labels) can affect model training and overall performance.

7. Real-Time Processing:

- Speed of Identification: The system must identify and log scrap materials quickly enough to not delay the overall scrap handling process.

- Latency Issues: Ensuring low latency in data processing and logging to maintain operational efficiency during peak times.

8. User Interface and Experience:

- Ease of Use for Personnel: The interface must be intuitive for dispatch staff who will interact with the system daily.

- Training Requirements: Adequate training and support will be necessary to ensure that personnel can effectively use the new system without disruptions.

Stage 2: Ideation (Solution Approach)

The ideation process for the Waste Monetization System begins by identifying specific needs in the manufacturing industry for improving waste management. Here’s a step-by-step breakdown of how this idea would evolve into a concrete solution:

1. Problem Identification

- Observation: Manufacturing plants often generate various types of scrap materials, such as wood, metal, and plastic, which need to be disposed of or recycled. However, manual identification, tracking, and categorization of waste are time-consuming and prone to error.

- Core Problem: There is no streamlined way to identify and log different types of waste, leading to inefficiencies, increased costs, and lost opportunities for recycling or repurposing valuable scrap.

2. Goal Definition

- The primary goal of the Waste Monetization System is to automate the identification and tracking of scrap materials in real time, optimizing waste processing, recycling, and overall plant efficiency. Secondary goals include reducing disposal costs, minimizing environmental impact, and promoting sustainability in manufacturing.

3. Solution Brainstorming

- AI-Powered Solution: Use computer vision and AI to automate the identification of different waste materials, reducing dependency on manual processes.

- Real-Time Data Logging: Implement real-time data logging for scrap materials identified, centralizing data for analysis, regulatory reporting, and process optimization.

- Edge Processing: To avoid latency and ensure immediate feedback, the AI model should run on an edge device, capturing and analyzing data directly at the source (e.g., production line).

- Feedback Mechanism: Include a feedback system allowing plant operators to provide input on misclassified scrap, continuously improving the model’s accuracy.

4. Use Case Development

- Primary Use Case: Automated waste classification and logging on the production floor, where materials like plastic, wood, and metal are generated as scrap.

- Secondary Use Case: A real-time dashboard for management and plant operators to view waste data, understand production waste patterns, and take action for cost savings or recycling.

5. Feasibility Assessment

- Technical Feasibility: Assess the feasibility of using computer vision and AI for identifying different waste types, requiring high-quality images and AI model training to achieve accurate classification.

- Resource Requirements: Identify hardware requirements (e.g., smart cameras, edge devices) and estimate costs associated with data storage, processing, and ongoing model maintenance.

- Scalability: Ensure that the system can scale with different waste types and adapt to new materials as the plant evolves.

6. Designing Key Components

- Smart Camera System: Design a system where smart cameras capture images of waste materials and send them to an edge device for processing.

- Edge AI Model: Develop a computer vision model capable of identifying key materials with accuracy and adaptability.

- Centralized Database: Set up a database to store waste data, accessible for reporting and analysis.

- User Feedback Mechanism: Implement an interface for plant operators to provide feedback on the model’s classification performance.

7. Validation and Stakeholder Engagement

- Stakeholder Buy-In: Present the idea to key stakeholders, such as plant managers and sustainability officers, highlighting benefits like cost reduction, operational efficiency, and improved sustainability.

- Pilot Testing: Conduct small-scale tests to validate the system’s accuracy, ease of use, and impact on waste management processes.

8. Developing a Minimum Viable Product (MVP)

- Based on the ideation and validation phases, create an MVP of the Waste Monetization System, incorporating core functionalities like waste classification, data logging, and operator feedback.

This ideation process ensures that the Waste Monetization System is both feasible and aligned with the operational needs of a manufacturing environment, focusing on efficiency, cost-effectiveness, and sustainability.

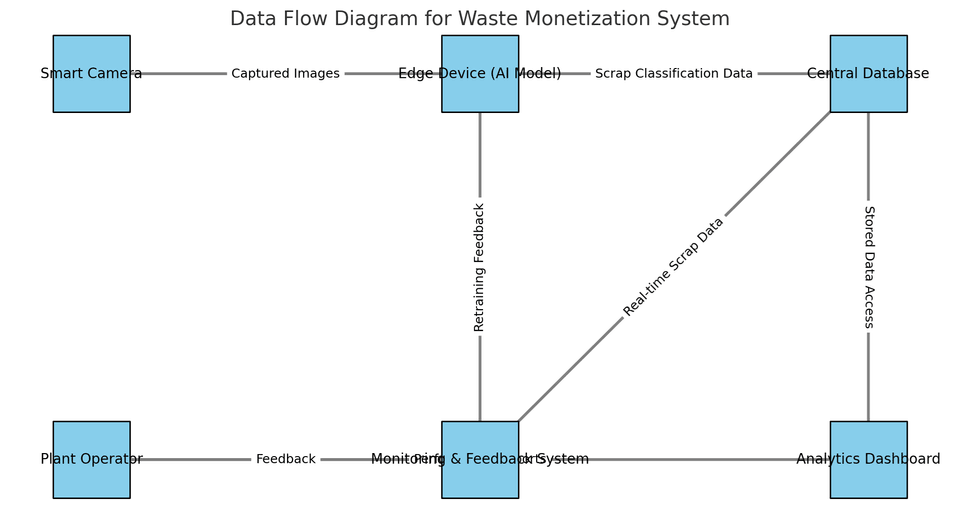

Data Flow Diagram (DFD) for the Waste Monetization System. This diagram represents the flow of data among the core components of the system:

- Smart Camera: Captures images of scrap materials and sends them to the edge device.

- Edge Device (AI Model): Processes images, classifies scrap, and sends classification data to the central database.

- Central Database: Stores classified scrap data, making it accessible for real-time monitoring and analysis.

- Plant Operator: Provides feedback on the system’s performance and misclassifications.

- Monitoring & Feedback System: Analyzes data for monitoring purposes, using operator feedback to identify areas for model retraining and improvement.

- Analytics Dashboard: Pulls data from the central database for reporting, generating performance reports and visualizations for operators and stakeholders.

Each component interacts to support efficient scrap classification, data storage, monitoring, and ongoing system improvement.

Stage 3: Data Collection

Data collection is crucial for the Waste Monetization System as it enables accurate identification and categorization of scrap materials in real-time. By systematically gathering data through smart cameras and edge-enabled AI devices, the system can enhance decision-making processes, optimize waste management practices, and improve operational efficiency. This data-driven approach allows for precise tracking of scrap generation, enabling better inventory management and resource allocation. Ultimately, effective data collection supports sustainability goals by maximizing the value extracted from waste materials, reducing environmental impact, and fostering a circular economy within the manufacturing process.

Data Collection Methods:

- Image Data: Collect images of scrap materials in various conditions using high-resolution cameras. Data should include multiple angles, orientations, and lighting conditions.

- Sensor Data: Gather data from the existing weighing machine to correlate weights with identified scrap types. This could include timestamps and specific scrap IDs for tracking.

Volume of Data:

- Aim to collect thousands of images for each type of scrap material (e.g., wooden stands, plastic spools, etc.) to ensure diversity in training. A target of 5,000–10,000 images per material type could provide a robust dataset.

Ensuring Data Quality:

- Data Validation: Implement a data validation process where collected images are reviewed for clarity and relevance. This includes checking for images that are out of focus or contain irrelevant materials.

- Diversity Checks: Ensure that the dataset covers a wide range of scenarios, including varying lighting conditions and placements on the weighing platform. This can be accomplished by conducting multiple data collection sessions at different times of day and in various operational scenarios.
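The out-of-focus check described under Data Validation could be partly automated. One common heuristic is the variance of the Laplacian: sharp images have strong edges and a high variance, blurry ones a low variance. The sketch below is a plain-Python illustration on a grayscale image stored as a list of rows; the function names and the threshold value are assumptions for illustration, and a real threshold would have to be tuned on the plant's own images.

```python
def laplacian_variance(img):
    """Variance of a 4-neighbour Laplacian over the interior pixels of a grayscale image."""
    h, w = len(img), len(img[0])
    values = [
        img[y - 1][x] + img[y + 1][x] + img[y][x - 1] + img[y][x + 1] - 4 * img[y][x]
        for y in range(1, h - 1)
        for x in range(1, w - 1)
    ]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)


def is_sharp_enough(img, threshold=100.0):
    """Hypothetical acceptance rule: keep the image only if its edge content is high enough."""
    return laplacian_variance(img) >= threshold
```

Images that fail the check would still be worth a quick manual review, since low-texture materials can score low without being out of focus.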

Stage 4: Model Development

Model Development is vital for the Waste Monetization System as it involves creating robust AI models that can accurately analyze and classify scrap materials captured by smart cameras. This stage focuses on selecting appropriate algorithms, training the models on diverse datasets, and fine-tuning them to ensure high precision and recall rates in identifying various types of waste. A well-developed model not only enhances the system's ability to recognize and log scrap effectively but also contributes to continuous improvement through feedback mechanisms. By leveraging advanced analytics and real-time data, this stage ultimately enables better decision-making, optimizes recycling processes, and increases the overall profitability of waste monetization efforts.

Model Selection:

- Type of Model: A Convolutional Neural Network (CNN) is the most suitable model for this application due to its proficiency in image classification tasks, especially in recognizing patterns and features in visual data.

- CNNs are designed to process pixel data and automatically learn hierarchical feature representations from images. This makes them ideal for identifying different scrap materials based on their unique visual characteristics.

- Their ability to handle spatial hierarchies enables the model to learn features such as edges, textures, and shapes, which are crucial for accurate identification in varied conditions.

Addressing Challenges: Variable Lighting Conditions:

- Data Augmentation: To counteract the effects of different lighting scenarios, the model can be trained using data augmentation techniques. This includes altering brightness, contrast, and applying filters to simulate various lighting conditions.

- Robust Training Dataset: Ensure the training dataset includes images captured under different lighting conditions to enhance the model's ability to generalize and maintain performance across diverse environments.

Addressing Challenges: View and Placement Variability:

- Image Rotation and Flipping: Use augmentation methods that rotate or flip images to provide the model with examples of materials in various orientations. This helps the model learn to recognize objects regardless of how they are placed on the weighing platform.

- Multi-View Training: Incorporate images from multiple angles to improve the model’s robustness against different perspectives and placements of scrap materials.
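The augmentation ideas above (brightness and contrast changes, rotation, flipping) can be sketched in a few lines. This is a minimal plain-Python illustration on a grayscale image stored as a list of rows with pixel values 0-255; the function names and the specific brightness and contrast values are assumptions, and a production pipeline would more likely use an image library's built-in augmentation tools.

```python
def adjust_brightness_contrast(img, brightness=0, contrast=1.0):
    """Scale contrast around mid-gray (128), shift brightness, clamp to 0-255."""
    return [
        [max(0, min(255, round((p - 128) * contrast + 128 + brightness))) for p in row]
        for row in img
    ]


def flip_horizontal(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90_clockwise(img):
    """Rotate the image a quarter turn clockwise."""
    return [list(col) for col in zip(*img[::-1])]


def augment(img):
    """Return several variants of one image to simulate lighting and placement changes."""
    return [
        adjust_brightness_contrast(img, brightness=-60),  # dim, night-like lighting
        adjust_brightness_contrast(img, brightness=60),   # bright daylight
        adjust_brightness_contrast(img, contrast=1.5),    # harsher shadows
        flip_horizontal(img),
        rotate_90_clockwise(img),
    ]
```

Each original image then contributes several training examples, which helps most when the real collection sessions could not cover every lighting condition or orientation.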

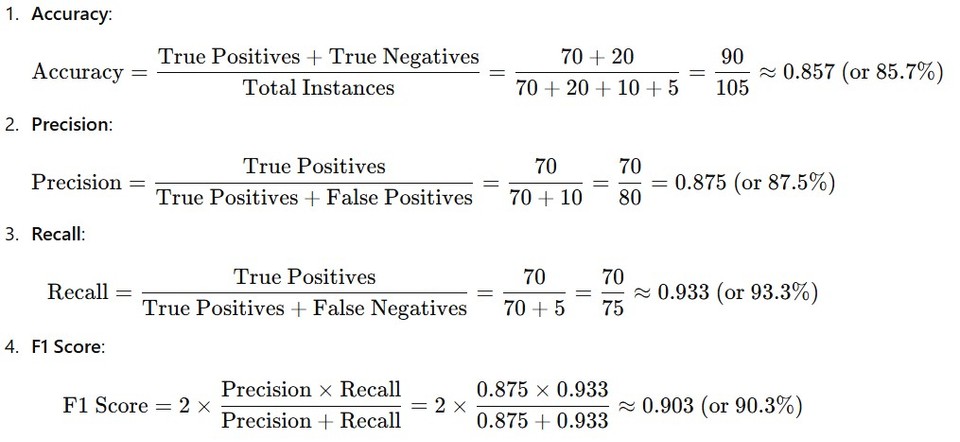

Measuring Model Performance

Performance Metrics: Utilize standard metrics such as Accuracy, Precision, Recall, and F1 Score to evaluate the model's performance.

- Accuracy: The percentage of correctly identified scrap materials out of the total.

- Precision: The ratio of true positive predictions to the total predicted positives, indicating the model’s reliability.

- Recall: The ratio of true positives to the actual positives, demonstrating the model's ability to identify all relevant instances.

- F1 Score: The harmonic mean of precision and recall, providing a balanced measure of the model's performance.

Example Calculation of Performance Metrics

Let's consider the following confusion matrix data for our AI model assessing scrap material identification:

| | Predicted Positive | Predicted Negative |
|---|---|---|
| Actual Positive | 70 (True Positive) | 5 (False Negative) |
| Actual Negative | 10 (False Positive) | 20 (True Negative) |
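As a quick check, the four metrics can be computed directly from the confusion matrix above. The function name is hypothetical; the formulas are the standard definitions given earlier.

```python
def classification_metrics(tp, fn, fp, tn):
    """Compute accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + fn + fp + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Counts taken from the confusion matrix above.
metrics = classification_metrics(tp=70, fn=5, fp=10, tn=20)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

For these counts the results are approximately 0.857 for accuracy (90/105), 0.875 for precision (70/80), 0.933 for recall (70/75), and 0.903 for the F1 score.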

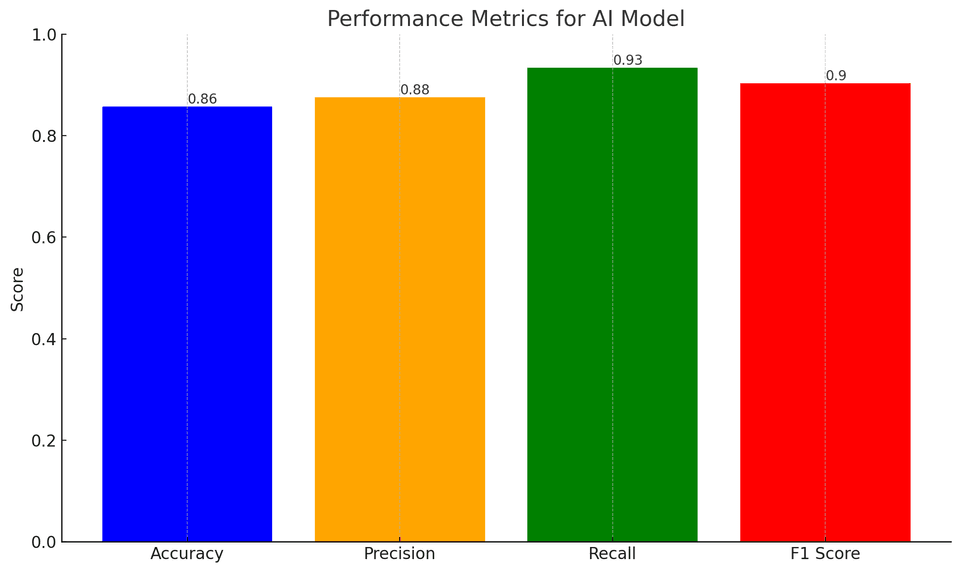

The bar graph visually represents the calculated performance metrics for the AI model, illustrating how well the model performs in identifying scrap materials. Each metric is plotted, allowing for a quick comparison of the model’s strengths and weaknesses.

Validation Dataset:

Use a separate validation dataset (20-30% of total images) to evaluate the model's performance, ensuring the model has not seen this data during training.
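A hold-out split along these lines might look like the sketch below. The function name, the fixed seed, and the file names in the usage example are assumptions; splitting separately per material type (a stratified split) would be a reasonable refinement so that every scrap class is represented in both sets.

```python
import random


def split_dataset(image_paths, val_fraction=0.2, seed=42):
    """Shuffle once with a fixed seed, then hold out a fraction for validation."""
    shuffled = list(image_paths)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]  # (training set, validation set)


# Hypothetical file names, for illustration only.
train_paths, val_paths = split_dataset([f"img_{i:04d}.jpg" for i in range(100)])
```

Fixing the seed keeps the split reproducible, so the same images stay out of training across retraining runs.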

Further Improvements

Continuous Learning: Implement a feedback loop where the model can be retrained periodically using new data collected from real-world operations. This helps the model adapt to changing conditions or variations in scrap materials.

Error Analysis: Conduct regular error analysis to identify common misclassifications. Understanding why certain scrap materials are misidentified can inform further model refinements and data collection strategies.

User Feedback: Integrate feedback from dispatch personnel regarding model performance in the field. Their insights can provide valuable information for ongoing adjustments and improvements to the system.

Stage 5: End-to-End System Testing

Conducting end-to-end system testing is crucial to ensure that the Waste Monetization System meets the client's objectives and operates effectively. This process involves validating the entire workflow, from data collection to scrap identification, and includes testing all integrated components such as the smart camera, weighing machine, and central database. Below is a detailed approach to conducting this testing:

1. Test Planning

- Define Objectives: Clearly outline the goals of the testing phase, such as verifying system accuracy, efficiency, and integration functionality.

- Identify Test Cases: Develop test cases based on the requirements and specifications. This includes functional, performance, and user acceptance testing (UAT) scenarios.

- Set Success Criteria: Establish criteria for success based on client objectives, such as accuracy thresholds for scrap identification, response times, and energy efficiency metrics.

2. Component Testing

Individual Component Tests: Before full integration, test each system component separately to ensure it functions correctly:

- Smart Camera: Test image capture, clarity assessment, and classification accuracy using various lighting and obstruction scenarios.

- Weighing Machine: Validate the accuracy of weight measurements and the proper functioning of the PLC that triggers the camera.

- Central Database: Ensure that data logging, storage, and retrieval processes function as intended.

3. Integration Testing

- Test Interactions Between Components: Verify that all components work together seamlessly:

- Ensure the camera activates correctly based on signals from the weighing machine.

- Validate that images are passed from the camera to the Multi-Class Image Classification Model without errors.

- Confirm that the scrap identification results are logged accurately in the central database.

- Simulate Real-World Scenarios: Conduct integration tests in conditions that mimic actual operational environments, including varying lighting and potential obstructions.

4. End-to-End Testing

Full System Workflow: Execute test scenarios that simulate the entire process from start to finish:

- Scenario 1: Place scrap on the weighing machine to trigger the camera. Test whether the camera captures the image, processes it, and sends the identification results to the database.

- Scenario 2: Test cases where the field of view is obstructed, and verify that alarms are raised, and notifications are triggered.

- Scenario 3: Test the system under various operational conditions, such as different types of scrap materials and placements, to ensure consistent identification accuracy.

5. Performance Testing

- Evaluate Response Times: Measure how quickly the system responds to weight detection and image processing.

- Assess System Load: Test how the system performs under peak load conditions, such as when multiple scrap pieces are processed simultaneously.

6. User Acceptance Testing (UAT)

- Involve Stakeholders: Engage end-users and stakeholders to validate the system meets their expectations and operational requirements.

- Feedback Collection: Gather feedback on system usability, performance, and any additional requirements or enhancements needed.

7. Documentation and Reporting

- Record Results: Document all test cases, results, and any issues encountered during testing.

- Review and Analysis: Analyze the data collected to identify patterns, areas for improvement, or necessary adjustments.

- Final Report: Prepare a comprehensive report summarizing the testing process, results, and recommendations for further action or modifications.

8. Validation Against Client Objectives

- Check Against Success Criteria: Evaluate the testing results against the established success criteria and client objectives. Ensure that the system meets the required accuracy, efficiency, and operational functionality.

Stage 6: Deployment

Model Deployment is essential for the Waste Monetization System as it involves integrating the developed AI models into the operational environment, ensuring they function effectively in real-time scenarios. This stage ensures that the models are seamlessly incorporated into the existing infrastructure, allowing for immediate application in scrap identification and logging processes. Successful deployment enables continuous monitoring and evaluation of the model's performance, facilitating timely updates and optimizations based on real-world data. By operationalizing the model, the Waste Monetization System enhances its efficiency, scalability, and responsiveness, ultimately driving improved waste management practices and maximizing resource recovery in the manufacturing process.

Running the Model on Raspberry Pi

Model Optimization:

Before deploying the model, it will be optimized for the Raspberry Pi environment. This may involve techniques such as quantization to reduce the model size and improve inference speed while maintaining acceptable accuracy. Libraries like TensorFlow Lite or OpenVINO can be used for this purpose.
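To make the idea of quantization concrete, the sketch below shows the underlying arithmetic: floating-point weights are mapped to 8-bit integers using a scale and a zero point, which shrinks storage to roughly a quarter at the cost of a small rounding error. This is an illustration only, with hypothetical function names; it is not how TensorFlow Lite or OpenVINO implement quantization internally, and in practice their converters would be used.

```python
def quantize_int8(weights):
    """Map float weights to int8 values with an affine (scale, zero point) scheme."""
    lo = min(min(weights), 0.0)  # make sure 0.0 is inside the representable range
    hi = max(max(weights), 0.0)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all weights are zero
    zero_point = round(-128 - lo / scale)
    quantized = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float weights from their int8 representation."""
    return [(q - zero_point) * scale for q in quantized]


weights = [-1.0, 0.0, 0.5, 1.0]
q, scale, zero_point = quantize_int8(weights)
restored = dequantize(q, scale, zero_point)
```

Comparing `restored` with `weights` shows the rounding error introduced, which is why accuracy should be re-measured after quantizing a model.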

Dependencies:

- The optimized model will be deployed on a Raspberry Pi equipped with a camera and connected to the existing infrastructure. The model will be loaded into the Raspberry Pi’s memory for inference.

- Ensure that all necessary libraries (like OpenCV for image processing and TensorFlow Lite for model inference) are installed on the Raspberry Pi.

Ensuring Real-Time Performance

Edge Computing:

- By running the model on the Raspberry Pi, we leverage edge computing, allowing for data processing to occur locally without the need for constant communication with a remote server. This significantly reduces latency.

Efficient Code:

- The model will be executed in a continuous loop that captures images from the camera at a predetermined interval (e.g., every second) while processing these images in real time to identify scrap materials.

Asynchronous Processing:

- Use multi-threading or asynchronous processing to handle image capture, model inference, and data logging simultaneously. This allows the Raspberry Pi to manage tasks without bottlenecks.
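One way to structure the multi-threaded approach is a three-stage pipeline connected by queues, so that capture, inference, and logging each run in their own thread. The sketch below uses only the Python standard library; `capture`, `infer`, and `log` are placeholder callables standing in for the camera read, the model call, and the database write, and the queue size is an arbitrary assumption.

```python
import queue
import threading


def run_pipeline(capture, infer, log, n_frames):
    """Run capture, inference and logging concurrently, passing work through queues."""
    frames = queue.Queue(maxsize=4)   # bounded so capture cannot outrun inference
    results = queue.Queue(maxsize=4)
    stop = object()                   # sentinel that tells the next stage to finish

    def capture_worker():
        for _ in range(n_frames):
            frames.put(capture())
        frames.put(stop)

    def inference_worker():
        while (frame := frames.get()) is not stop:
            results.put(infer(frame))
        results.put(stop)

    def logging_worker():
        while (result := results.get()) is not stop:
            log(result)

    workers = [
        threading.Thread(target=worker)
        for worker in (capture_worker, inference_worker, logging_worker)
    ]
    for t in workers:
        t.start()
    for t in workers:
        t.join()
```

The bounded queues provide back-pressure: if inference is slower than capture, the capture thread waits instead of filling the Raspberry Pi's memory with unprocessed frames.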

System Longevity Maintenance

Regular Updates:

- Schedule periodic updates for the model, which can be implemented remotely via an internet connection. This includes retraining the model with new data and applying software updates for bug fixes and improvements.

Monitoring System Health:

- Implement monitoring scripts that check the system’s health, ensuring that the camera, model, and weighing machine are operational. Alerts can be set up to notify the maintenance team in case of any failures.

Cooling Solutions:

- If the Raspberry Pi is operating in a hot environment, employ cooling solutions (such as heat sinks or fans) to prevent overheating, which can affect performance and lifespan.

Interfacing with Central Database

Data Logging:

- When scrap is placed on the weighing machine, the Raspberry Pi will capture an image, perform inference to identify the scrap type, and retrieve the weight from the weighing machine.

Database Integration:

- After processing, the Raspberry Pi will log the scrap type, weight, and timestamp into a central database. This can be accomplished via REST APIs over HTTP, a messaging protocol such as MQTT, or a direct database connection. Ensure that the database is secure and has appropriate redundancy measures in place.
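The shape of a log entry (scrap type, weight, timestamp) can be sketched with SQLite from the Python standard library as a stand-in for the central database. The table name, column names, and the kilogram unit are assumptions for illustration; the real system would write to whatever schema and transport the plant's database exposes.

```python
import sqlite3
from datetime import datetime, timezone


def log_scrap(conn, scrap_type, weight_kg):
    """Insert one scrap record with a UTC timestamp (hypothetical schema)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS scrap_log "
        "(scrap_type TEXT, weight_kg REAL, logged_at TEXT)"
    )
    conn.execute(
        "INSERT INTO scrap_log VALUES (?, ?, ?)",
        (scrap_type, weight_kg, datetime.now(timezone.utc).isoformat()),
    )
    conn.commit()


# In-memory database used here purely for demonstration.
conn = sqlite3.connect(":memory:")
log_scrap(conn, "plastic spool", 12.5)
```

Storing timestamps in UTC avoids ambiguity when records from a 24/7 operation are later compared across shifts or daylight-saving changes.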

Optimization: Integration of the Weighing Machine for Energy Efficiency

In the Waste Monetization System, integrating the weighing machine with the smart camera plays a crucial role in optimizing energy consumption while maintaining effective operational functionality. The camera's activation can be triggered based on the weighing machine's status, ensuring that the camera operates only when necessary. Here’s how this integration can be structured:

Integration Workflow

1. Weighing Machine Connection:

- The weighing machine is connected to the central control system, which monitors its operational state.

- A programmable logic controller (PLC) or similar control system interfaces with the weighing machine to receive data regarding its status, such as when it is in use or idle.

2. Trigger Mechanism:

- The smart camera is designed to remain in a low-power standby mode until it receives a signal from the weighing machine indicating that scrap material is present for weighing.

- When the weighing machine detects that scrap has been placed on it, it sends a signal to the smart camera to power on and begin capturing images for analysis.

3. Energy-Saving Strategy:

- By activating the camera only when needed (i.e., when scrap is being weighed), the system minimizes unnecessary power consumption.

- This approach significantly reduces energy usage, as the camera is one of the components that may consume considerable power when actively processing images.

4. Operational Flow:

- When the operator places scrap material on the weighing machine:

- The weighing machine detects the weight and sends a trigger signal to the smart camera.

- The camera powers on and captures images of the material placed on the scale.

- The image is then processed by the Multi-Class Image Classification Model to assess the field of view's clarity.

- After the identification process is complete and the data logged, the camera can automatically return to its low-power standby mode until the next trigger from the weighing machine.

5. Continuous Monitoring:

- In addition to energy efficiency, this setup allows for continuous monitoring of the system. The camera remains ready to operate when the weighing machine is active, ensuring that all scrap materials are identified without delay.
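The trigger-driven workflow can be pictured as a small state machine: the camera sits in standby, wakes on a weight signal, captures and classifies, logs the result, and returns to standby. The class below is a hedged sketch with hypothetical names; `capture`, `classify`, and `log` are placeholders for the real camera, model, and database calls, and the `min_weight` threshold is an assumption for filtering out empty-platform readings.

```python
class TriggeredCamera:
    """Camera controller that stays in standby until the weighing machine signals a load."""

    def __init__(self, capture, classify, log, min_weight=0.0):
        self.capture = capture
        self.classify = classify
        self.log = log
        self.min_weight = min_weight
        self.state = "standby"

    def on_weight_signal(self, weight):
        """Handle one signal from the weighing machine and return the scrap label, if any."""
        if weight <= self.min_weight:
            return None  # nothing on the platform: stay in standby
        self.state = "active"
        try:
            label = self.classify(self.capture())
            self.log(label, weight)
            return label
        finally:
            self.state = "standby"  # always drop back to low power, even on errors
```

Returning to standby in a `finally` block means a failed capture or classification cannot leave the camera powered on indefinitely.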

Benefits of Integration

Energy Efficiency:

- The camera only operates when necessary, drastically reducing power consumption during idle periods.

Extended Equipment Lifespan:

- Reducing the operational time of the camera can lead to less wear and tear, ultimately extending its lifespan.

Improved Response Time:

- The quick triggering mechanism ensures that the system can promptly respond to scrap placement, improving overall operational efficiency.

Cost-Effective Operation:

- By optimizing energy usage and equipment operation, the system can achieve cost savings, which can be significant over time.

Stage 7: Monitoring and Feedback (Handling Failure Mechanisms)

To ensure the robustness and reliability of the Waste Monetization System, it's essential to have a comprehensive strategy for handling failure mechanisms across various components, including AI models, database integration, weighing machines, and hardware for sound and light alerts. Here’s how we approach failure management:

1. AI Model Failures

Detection and Response:

- Monitoring and Alerts: Implement monitoring tools to continuously assess the performance of AI models. Set thresholds for accuracy and processing time, so if a model's performance drops below a predefined level, an alert is triggered.

- Fallback Mechanism: If the AI model fails to classify an image or identify scrap, the system can revert to a manual mode where operators can intervene and classify the material, ensuring that operations can continue without disruption.

Logging and Analysis:

- Error Logging: Record instances of model failures, including the specific conditions (e.g., lighting, occlusion) that led to the failure. This data can be invaluable for retraining or refining the model.

- Periodic Review: Regularly analyze logged errors to identify patterns that may require model retraining or enhancements.
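As a rough sketch of how threshold alerts, error logging, and the manual fallback might fit together, consider the following. The class and function names, the default thresholds, and the use of a confidence score to decide when the model "fails to classify" are all assumptions made for illustration.

```python
from collections import deque


class ModelMonitor:
    """Tracks rolling accuracy and latency; thresholds are illustrative."""

    def __init__(self, min_accuracy=0.9, max_latency_s=0.5, window=100):
        self.min_accuracy = min_accuracy
        self.max_latency_s = max_latency_s
        self.outcomes = deque(maxlen=window)  # recent correct/incorrect flags
        self.error_log = []                   # conditions recorded per failure

    def record(self, correct, latency_s, conditions=None):
        """Record one prediction and return any alerts it triggers."""
        self.outcomes.append(bool(correct))
        if not correct:
            self.error_log.append(conditions or {})
        alerts = []
        accuracy = sum(self.outcomes) / len(self.outcomes)
        if accuracy < self.min_accuracy:
            alerts.append("accuracy below threshold")
        if latency_s > self.max_latency_s:
            alerts.append("latency above threshold")
        return alerts


def classify_with_fallback(model, image, min_confidence=0.6):
    """Return (label, source); defer to an operator when the model cannot decide."""
    try:
        label, confidence = model(image)
    except Exception:
        return None, "manual"
    if confidence < min_confidence:
        return None, "manual"
    return label, "model"
```

A result of `(None, "manual")` would route the item to the operator, and the conditions stored in `error_log` become the input for the periodic review.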

2. Database Integration Failures

Connection Handling:

- Retry Logic: Implement automatic retry mechanisms for database connections. If a connection fails, the system will attempt to reconnect a predetermined number of times before escalating the issue.

- Data Caching: Utilize temporary storage (cache) to hold data in the event of a database failure. This allows the system to continue operations while queuing data to be logged once the database connection is restored.

Graceful Degradation:

- Alert Notifications: Notify operators via sound or visual alerts if the database integration fails, prompting immediate investigation.

- Fallback Procedures: If the database is unreachable, implement a local logging mechanism to store data until the connection is reestablished.
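The retry and caching behaviour can be illustrated with a small buffered writer. This is a sketch under stated assumptions: `BufferedWriter` and `write_fn` are hypothetical, the in-memory queue stands in for whatever local store the system uses, and the exponential backoff is one common choice rather than a requirement.

```python
import time
from collections import deque


class BufferedWriter:
    """Retries database writes and queues records while the database is down."""

    def __init__(self, write_fn, max_retries=3, base_delay_s=0.5):
        self.write_fn = write_fn      # hypothetical function that writes one record
        self.max_retries = max_retries
        self.base_delay_s = base_delay_s
        self.pending = deque()        # local cache of unwritten records

    def _try_write(self, record):
        for attempt in range(self.max_retries):
            try:
                self.write_fn(record)
                return True
            except ConnectionError:
                # Exponential backoff between attempts.
                time.sleep(self.base_delay_s * (2 ** attempt))
        return False

    def log(self, record):
        """Queue the record, then flush in order; True means nothing is pending."""
        self.pending.append(record)
        return self.flush()

    def flush(self):
        while self.pending:
            if not self._try_write(self.pending[0]):
                return False  # retries exhausted: escalate to operator alerts
            self.pending.popleft()
        return True
```

Records are written oldest first, so the log keeps its original order once the connection is restored.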

3. Weighing Machine Failures

System Redundancy:

- Backup Weighing Device: Consider integrating a secondary weighing machine as a backup to ensure continuous operation if the primary device fails.

Failure Detection:

- Health Checks: Regularly perform health checks on the weighing machine to ensure it is functioning correctly. This can include checks for calibration and error rates.

- Operator Alerts: If the weighing machine fails to produce valid readings, an alert will be sent to operators to examine the equipment.
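A health check along these lines could look like the sketch below. The function name, the capacity limit, and the 1% calibration tolerance are illustrative assumptions, not values from the deployed system.

```python
def check_scale(readings_kg, reference_kg=None, reference_reading_kg=None,
                max_capacity_kg=500.0, calibration_tolerance=0.01):
    """Return a list of problems found; an empty list means the scale looks healthy."""
    problems = []
    if not readings_kg:
        problems.append("no readings received")
    # A reading is invalid if it is missing, negative, or above capacity.
    invalid = [r for r in readings_kg
               if r is None or r < 0 or r > max_capacity_kg]
    if invalid:
        problems.append(f"{len(invalid)} invalid reading(s)")
    # Optional calibration check against a known reference weight.
    if reference_kg and reference_reading_kg is not None:
        drift = abs(reference_reading_kg - reference_kg) / reference_kg
        if drift > calibration_tolerance:
            problems.append(f"calibration drift {drift:.1%}")
    return problems
```

Any non-empty result would be forwarded to operators as an alert.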

4. Hardware Failures (Sound and Light Alerts)

Monitoring and Diagnostics:

- Regular Testing: Implement routine tests for sound and light alert systems to ensure they are operational. This can include automated tests that simulate alerts.

- Redundancy: Use multiple alert systems to ensure redundancy. For example, if a light fails, an audio alert will still be activated.

Failure Response:

- Immediate Notifications: If an alert system fails, operators will receive notifications through the main control panel, prompting immediate investigation and repair.

- User Feedback Loop: Incorporate user feedback mechanisms to report failures in the alert systems, ensuring that all operators can contribute to maintaining system integrity.
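Redundant alerting can be sketched as a dispatcher that tries every channel and reports the ones that failed. The `raise_alert` function and the channel names are hypothetical; real channels would wrap the light and buzzer drivers.

```python
def raise_alert(message, channels):
    """Send the message on every channel; return the names of channels that failed."""
    failed = []
    delivered = 0
    for name, send in channels.items():
        try:
            send(message)
            delivered += 1
        except Exception:
            # One broken device must not block the remaining channels.
            failed.append(name)
    if delivered == 0:
        raise RuntimeError("all alert channels failed")
    return failed
```

The returned list is what the main control panel would display so that operators know which device needs repair.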

5. Comprehensive Testing and Maintenance

Routine Maintenance:

- Schedule regular maintenance checks for all components of the Waste Monetization System. This includes software updates, hardware inspections, and performance assessments of AI models.

Testing for Failures:

- Conduct failure mode and effects analysis (FMEA) to anticipate potential failure points in the system. Create and test contingency plans for each identified failure mode.
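FMEA results are commonly ranked by a risk priority number (RPN), the product of severity, occurrence, and detection ratings. The sketch below shows that ranking; the `FailureMode` class is hypothetical, and any ratings you feed it are your own estimates, not measurements from this system.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    component: str
    description: str
    severity: int    # 1 (negligible) to 10 (critical)
    occurrence: int  # 1 (rare) to 10 (frequent)
    detection: int   # 1 (always detected) to 10 (rarely detected)

    @property
    def rpn(self):
        # Risk priority number: higher values deserve attention first.
        return self.severity * self.occurrence * self.detection


def prioritize(modes):
    """Sort failure modes from highest to lowest RPN."""
    return sorted(modes, key=lambda m: m.rpn, reverse=True)
```

Contingency plans can then be written and tested in RPN order, starting with the highest-risk failure mode.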

Stage 8: Iteration

Iteration is an ongoing, cyclical process in which the AI product is refined and improved based on data from the monitoring stage. For the Waste Monetization System, iteration means consistently refining the model and the system’s features based on real-world performance insights, operator feedback, and evolving manufacturing needs. Here’s how iteration is applied to this specific AI product:

1. Data-Driven Model Improvement

- Analysis of Monitoring Data: Use data collected in the monitoring stage, such as identification accuracy, error rates, and operator feedback, to identify specific areas needing improvement.

- Model Retraining: When model performance begins to degrade (e.g., due to data drift or changes in the types of waste), initiate retraining using a more recent dataset from the manufacturing environment. The refreshed dataset should include new and more diverse types of scrap, which can improve the model’s adaptability.

- Algorithm Adjustment: Adjust the AI algorithm to better distinguish materials that may look similar (e.g., certain plastics vs. metal). Experiment with various model architectures or hyperparameters to improve robustness.
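One way to decide when retraining is due is to measure drift in the mix of predicted scrap classes. The sketch below uses the population stability index (PSI) for that purpose; PSI is our choice for illustration, and the 0.25 cut-off is a common rule of thumb rather than a value from this system.

```python
import math
from collections import Counter


def population_stability_index(reference, recent, eps=1e-6):
    """PSI between two lists of categorical labels; higher means more drift."""
    categories = set(reference) | set(recent)
    ref_counts, new_counts = Counter(reference), Counter(recent)
    psi = 0.0
    for c in categories:
        # Floor each share at eps so unseen categories do not divide by zero.
        p = max(ref_counts[c] / len(reference), eps)
        q = max(new_counts[c] / len(recent), eps)
        psi += (q - p) * math.log(q / p)
    return psi


def should_retrain(reference, recent, threshold=0.25):
    """Flag retraining when the label mix has shifted beyond the threshold."""
    return population_stability_index(reference, recent) > threshold
```

Here `reference` would hold the labels seen around training time and `recent` the labels from the latest production window. A new category, such as a composite material appearing for the first time, pushes the index up sharply.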

2. Refining Identification Accuracy

- Error Analysis: Conduct a systematic analysis of common errors, such as frequent false positives or false negatives for specific materials. This could include misidentified plastic versus metal scraps or mistaken identification of recyclable materials as waste.

- Feature Engineering: Modify or add new features to the model that better capture the distinguishing characteristics of different materials. For instance, integrating texture or color analysis might improve classification accuracy for certain types of waste.
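The error analysis can start from a simple tally of false positives, false negatives, and the most frequent confusions per material. The function below is a minimal sketch; it assumes ground-truth labels are available, for example from operator corrections.

```python
from collections import Counter


def per_class_errors(pairs):
    """Tally errors from an iterable of (true_label, predicted_label) pairs."""
    false_pos, false_neg, confusions = Counter(), Counter(), Counter()
    for true, pred in pairs:
        if true != pred:
            false_neg[true] += 1           # the true class was missed
            false_pos[pred] += 1           # the predicted class was wrongly assigned
            confusions[(true, pred)] += 1  # which pair gets mixed up
    return false_pos, false_neg, confusions
```

Calling `confusions.most_common()` on the result lists the material pairs that are confused most often, which points to where new features such as texture or color might help.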

3. Incorporating Feedback from Operators

- Feedback Loops: Gather continuous feedback from plant operators on the system’s accuracy and usability. Operators might report specific scenarios where the model fails, such as difficulty identifying oily or dusty materials.

- User Interface (UI) Enhancements: Update the UI based on user feedback, making it easier for operators to input corrections when the system misclassifies an item. This will provide valuable labeled data for future retraining.

- Enhanced Training with Edge Cases: Create a focused dataset of these edge cases and retrain the model to improve handling of challenging scenarios reported by the operators.

4. Expanding Functionality and Adaptability

- New Waste Types and Categories: If the plant begins producing new materials or types of scrap, update the model to identify these new waste categories accurately. For instance, if composite materials or a new grade of plastic are introduced, these should be integrated into the system’s identification capabilities.

- Multi-Modal Sensing: Consider integrating additional sensor data (e.g., weight or chemical composition data) alongside the camera input, if necessary, to improve identification accuracy for materials that are difficult to distinguish visually.

5. Testing and Validation

- A/B Testing: Implement A/B testing where two or more model versions are run simultaneously on different scrap materials to compare performance and validate improvements.

- Real-World Simulation: Run simulated trials to test how the model performs in various conditions, like low-light settings, high scrap volumes, or when materials are partially obstructed. Make adjustments based on these tests to ensure the system remains robust in dynamic production environments.
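When comparing two model versions in an A/B test, a two-proportion z-test is one simple way to check whether a difference in accuracy is likely to be real. The sketch below assumes each version was scored on its own independent sample of items; the function name is hypothetical.

```python
import math


def two_proportion_z(correct_a, n_a, correct_b, n_b):
    """Return (z, two-sided p-value) for the accuracy difference B minus A."""
    p_a, p_b = correct_a / n_a, correct_b / n_b
    pooled = (correct_a + correct_b) / (n_a + n_b)
    # Standard error under the null hypothesis of equal accuracy.
    # Note: this is zero (and the division fails) if pooled is exactly 0 or 1.
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

A positive `z` with a small p-value suggests version B is genuinely more accurate than version A rather than lucky on this sample.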

6. Continuous Improvement of the Edge Hardware

- Hardware Upgrades: As the model evolves and requires more processing power, consider upgrading edge devices or cameras to enhance performance and allow for more complex models.

- Optimization for Latency Reduction: Focus on optimizing the edge device’s processing capabilities to reduce latency, enabling real-time scrap identification even during peak operational hours.

7. Documentation and Communication of Changes

- Change Logs and Version Control: Document all changes made to the model and system, including algorithm tweaks, dataset updates, and hardware modifications. This provides a clear history of the system’s evolution, crucial for tracking improvements and ensuring continuity.

- Stakeholder Updates: Regularly inform stakeholders (e.g., plant management and operators) about new model versions, system changes, and improvements in identification accuracy. This promotes transparency and helps in gaining user trust and engagement.

8. Automated Iteration Pipeline

- CI/CD Pipeline for Machine Learning (MLOps): Implement a continuous integration/continuous delivery (CI/CD) pipeline that automates retraining, testing, and deployment of model updates. This allows for seamless iteration and reduces downtime in production.

- Model Versioning: Use model versioning to keep track of different versions deployed in the plant, allowing easy rollback if a new model version performs worse than its predecessor.
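Model versioning with rollback can be sketched as a small in-memory registry. `ModelRegistry` is a hypothetical stand-in for a real model registry, and accuracy is used here as the single comparison metric for simplicity.

```python
class ModelRegistry:
    """Tracks registered model versions and the order in which they were deployed."""

    def __init__(self):
        self.versions = {}  # version -> metrics
        self.history = []   # deployment order, latest last

    def register(self, version, accuracy):
        self.versions[version] = {"accuracy": accuracy}

    def deploy(self, version):
        if version not in self.versions:
            raise KeyError(f"unknown version: {version}")
        self.history.append(version)

    @property
    def active(self):
        return self.history[-1] if self.history else None

    def rollback_if_worse(self):
        """Revert to the previous deployment if the active one scores lower."""
        if len(self.history) < 2:
            return False
        current, previous = self.history[-1], self.history[-2]
        if self.versions[current]["accuracy"] < self.versions[previous]["accuracy"]:
            self.history.pop()
            return True
        return False
```

In a CI/CD pipeline, a check like `rollback_if_worse` would run after each deployment once enough production data has been scored.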

9. Exploring Advanced AI Techniques

- Transfer Learning: Experiment with transfer learning to leverage models pre-trained on similar datasets, which could shorten training time and improve model accuracy for scrap identification tasks.

- Reinforcement Learning for Adaptive Improvement: Investigate reinforcement learning methods to enable the system to learn continuously from real-time feedback, helping it dynamically improve without requiring constant manual intervention.

Conclusion

The Waste Monetization System shows how AI-driven waste management can improve manufacturing efficiency and sustainability. Through a structured AI product lifecycle with iterative improvement, the system can adapt to real-world challenges, accurately identify diverse types of waste, and evolve alongside the plant's changing needs. The continuous cycle of monitoring, gathering feedback, retraining, and enhancing functionality helps the product maintain high performance while delivering cost savings and environmental benefits. This is the value of a responsive, data-driven iteration process in building impactful, scalable AI solutions.

References

1. Cooper, R. G. (1990). "Stage-Gate Systems: A New Tool for Managing New Products." Business Horizons, 33(3), 44-54.

2. Dorst, K. (2015). "Frame Innovation: Create New Thinking by Design." The MIT Press.

3. Kelleher, J. D., & Tierney, B. (2018). "Data Science: A Practical Introduction to Data Science." The MIT Press.

4. Goodfellow, I., Bengio, Y., & Courville, A. (2016). "Deep Learning." The MIT Press.

5. Paul, R. J., & Hovey, J. (2014). "The importance of software testing." IEEE Software, 31(1), 78-81.

6. Hüttermann, M. (2012). "DevOps for Developers." Springer.

7. Kim, G., Debois, P., & Willis, D. (2016). "The DevOps Handbook: How to Create World-Class Agility, Reliability, & Security in Technology Organizations." IT Revolution Press.

8. Ries, E. (2011). "The Lean Startup: How Today’s Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses." Crown Business.